Statistics in R

Stuart Demmer

03 August 2018

Introduction

This page is an attempt to put all of what we have been learining into practice. But in order to understand what we are going to be doing here we need to have a quick recap of basic statistics. In their simplest definition many univariate parametric statistical tests (those are things like t-test, ANOVA, GLM, GLMM) are testing for whether the means from two or more treatments are stastically different from one another. They do this by calculating the proportional overlap that the treatments' distributions with the other treatments' distribution. If that proportional overlap is sufficiently low (say around 5%) then we reject the null hypothesis (the hypothesis that the distributions overlap one another too much and so we cannot say with sufficient surity that the means are really different). Otherwise if there is more that around 5% overlap we cannot reject the null hypothesis and so the means are not different.

T-test

There are several different kinds of t-tests. I’m not going to go through them all here, they all work in a similar manner. To start with we will just go with the independent samples t-test. This tests for whether the difference between the two treatment means is different from zero. If it is then the means are not the same. Let us pull up some data:

head(sleep)## extra group ID

## 1 0.7 1 1

## 2 -1.6 1 2

## 3 -0.2 1 3

## 4 -1.2 1 4

## 5 -0.1 1 5

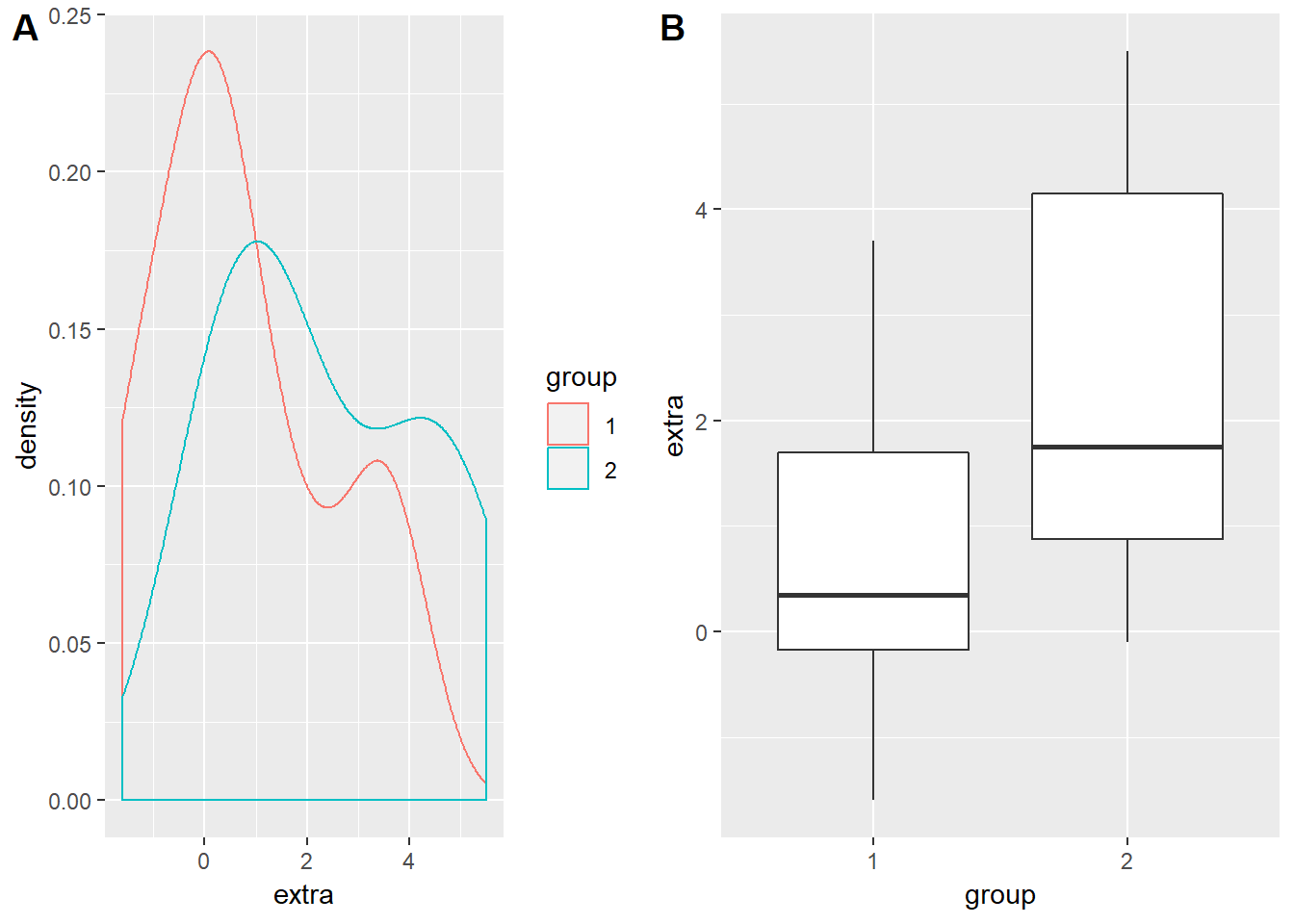

## 6 3.4 1 6This data shows the response of students to a sleeping drug. extra indicates the change in hours slept, group shows the treatment that they were given, and ID is each unique participant's number. Technically this is a paired sample (each participant's sleep is measured once before and then once after taking the sleeping pill) but we will ignore that for now. Let us plot the density distributions and boxplots of the two groups of students:

ggplot(data = sleep, aes(x = extra, colour = group)) +

geom_density()

ggplot(data = sleep, aes(x = group, y = extra)) +

geom_boxplot()

So group 2 definitely seems to gain around an hour and a half to two hours more sleep than did group 1. But this is just visual data analysis - not very statistical… To test this we can call up the t.test() function. type ?t.test to access the help file for the function. There are two methods that we can use to enter our data into the arguments of this function - the one I find the most useful is the ## S3 method for class 'formula'. This is a pretty universal way that many statistical functions require their arguments to be inputted:

test.name()- the name of the formula to carry out the required statistical calculation.

formula- the formula that the distributions follow. The response variable (the y value, what we measured) goes on the left. The factors which describe the response variable’s distribution go on the right. These two sides are sepparated by a~.

data- where the data can be found.

That is it. There are other arguments that are specific to the kind of test that can also be included in these functions. We can play around with these in a moment but for now let us run the test:

sleepTtest <- t.test(extra ~ group, data = sleep)

sleepTtest##

## Welch Two Sample t-test

##

## data: extra by group

## t = -1.8608, df = 17.776, p-value = 0.07939

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -3.3654832 0.2054832

## sample estimates:

## mean in group 1 mean in group 2

## 0.75 2.33That is a pretty neat output. Note what I did there - I saved the test as a variable called sleepTtest (I could have called it anything) and then I called that variable again. As soon as I run both those lines my output is printed out in the console. The great thing about doing it this way is that once I have saved my output it is saved - I do not have to sift through pages of output printouts looking for the test I want anymore. If I want to know what the details of the test were then I can just call sleepTtest again and I will have my answer.

Our output here indicates that the mean difference was not significant at the 5% level. But looking at the ID field we can see that the same subjects are used in either group and so the test is actually a paired samples t-test. To incorporate this new bit of information into our test we can go:

sleepTtest <- t.test(extra ~ group, data = sleep, paired = TRUE)

sleepTtest##

## Paired t-test

##

## data: extra by group

## t = -4.0621, df = 9, p-value = 0.002833

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -2.4598858 -0.7001142

## sample estimates:

## mean of the differences

## -1.58And there you have it - this sleeping pill significantly improves the students’ sleeping time by giving them an extra 1.58 hours of sleep. But in the life sciences we seldom do tests involving only two treatments and so analysis of variance is probably more relevant to us. The method is similar.

Analysis of variance

aov()

The dataset we will use here is called npk. In this experiment peas were fertilised with combinations of nitrogen, phosphorus, and potassium using a factorial disign:

head(npk)## block N P K yield

## 1 1 0 1 1 49.5

## 2 1 1 1 0 62.8

## 3 1 0 0 0 46.8

## 4 1 1 0 1 57.0

## 5 2 1 0 0 59.8

## 6 2 1 1 1 58.5So we have three treatment variables with one blocking variable. This is a standard agricultural or greenhouse experiment. To test for whether the means of the treatments are different we would follow a similar proceedure. We will use aov() to conduct the analysis and then in order to produce the output analysis of variance table we will use anova(). The three most reasonable ways we could carry out this analysis are:

## the most basic model. Fertilisation as main effects. No blocking or interactions incorporated.

npkAov <- aov(yield ~ N + P + K, data = npk)

## blocking has now been included.

npkAov <- aov(yield ~ N + P + K + block, data = npk)

## both interactions and blocking have been incorporated into our model

npkAov <- aov(yield ~ N*P*K + block, data = npk)anova()

Having done that we need to call a separate function to produce our analysis of variance table. anova() produces anova tables for aov(), lm() (linear models), glm() (generalised linear models) and (g)lmer() (linear or generalised linear mixed models). To get the output all we need to do is:

anova(npkAov)## Analysis of Variance Table

##

## Response: yield

## Df Sum Sq Mean Sq F value Pr(>F)

## block 5 343.29 68.659 4.4467 0.015939 *

## N 1 189.28 189.282 12.2587 0.004372 **

## P 1 8.40 8.402 0.5441 0.474904

## K 1 95.20 95.202 6.1657 0.028795 *

## N:P 1 21.28 21.282 1.3783 0.263165

## N:K 1 33.13 33.135 2.1460 0.168648

## P:K 1 0.48 0.482 0.0312 0.862752

## Residuals 12 185.29 15.441

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1That is a neat little output. But copying it into our document is not as easy as you might think. This output is only text - to put it into a tabular form we need to tidy it up using the tidy() from the broom package. Install broom now, load it from the library and then go:

tidyNPKAnova <- tidy(anova(npkAov))

View(tidyNPKAnova)That has now saved your anova into a neat table which you can copy into a spreadsheet program to format the lines if you like. This is the simplest way to make tables but there are whole packages (check out kable) specifically dedicated to producing publication quality tables from within R. The way you copy these tables into your spreadsheet program is that you scroll down to the bottom right corner of the table, click in there and then drag up to the top left. Then copy and paste into the spreadsheet - there are better ways but this is quick and nasty.

post hoc-ing with emmeans

After running the statistical test and noting that there are significant difference we often need see where these differences lie and plot them graphically. This particular dataset is not the greatest for doing that. I chose it because it has lost of variables and it can easily show you how to incorporate multiple variables into the formula argument. To look at post hoc comparrisons we will run a new test on the iris dataset. This contains flower morphology measurements from three species of iris:

head(iris)## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

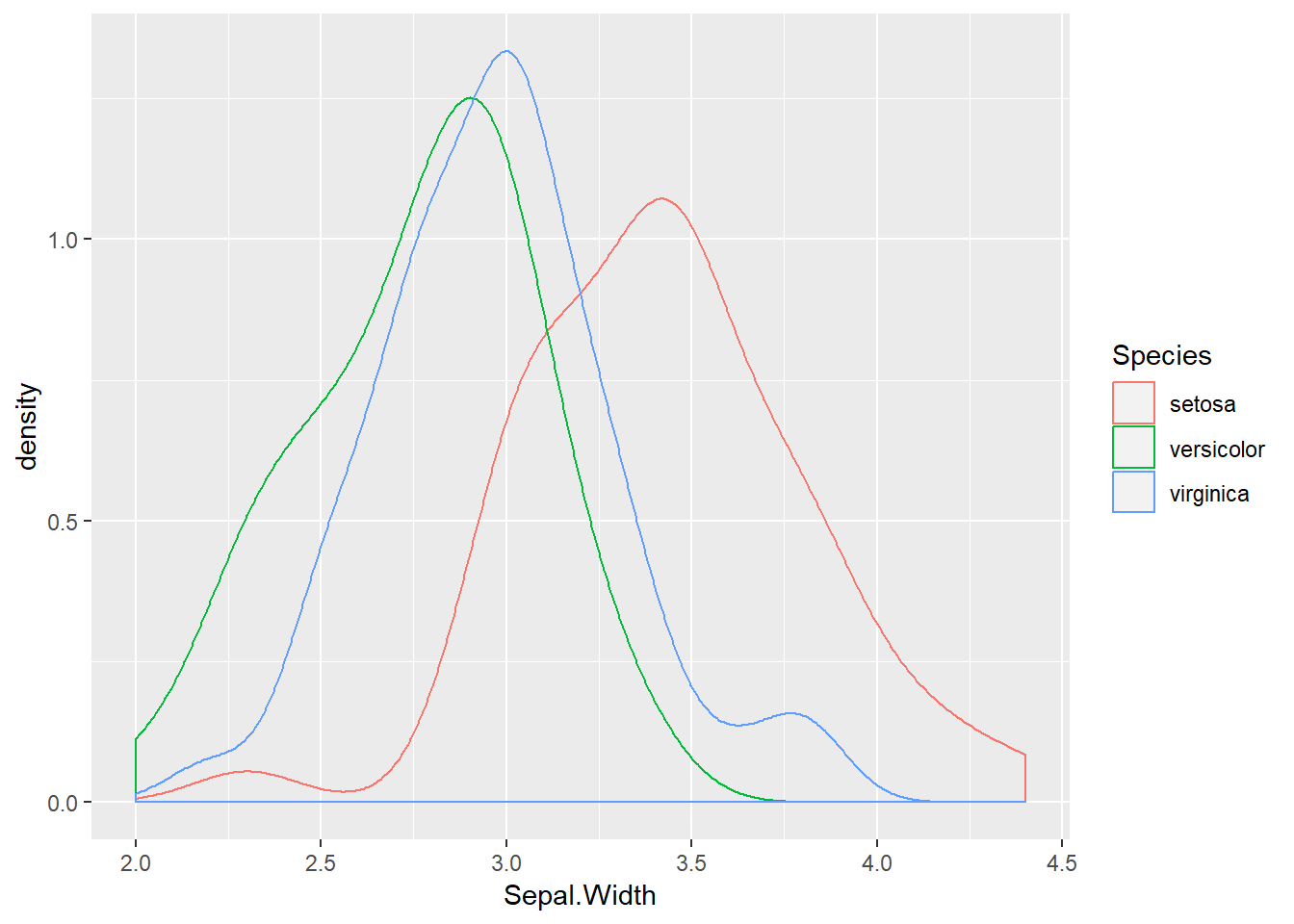

## 6 5.4 3.9 1.7 0.4 setosaLet us see if there is a difference in Sepal.Width across the three species:

ggplot(iris) +

geom_density(aes(x = Sepal.Width, colour = Species))

irisAov <- aov(Sepal.Width ~ Species, data = iris)

anova(irisAov)## Analysis of Variance Table

##

## Response: Sepal.Width

## Df Sum Sq Mean Sq F value Pr(>F)

## Species 2 11.345 5.6725 49.16 < 2.2e-16 ***

## Residuals 147 16.962 0.1154

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1An extremely significant result. It seems likely that versicolor and verginica are somewhat similar but that setosa has much wider sepals. We can extract the means for each species with emmeans. Install it and load it from your library now. This package is incredibly powerful and will be useful when we move on to GLMs in the next section. It extracts the estimated marginal means from the analysis object (irisAov in our case) and then presents them on either the analysis scale or the response scale. The developer has put together some very useful tutorials and walkthorughs (see the “Getting started” pane) Let us try it out:

irisAov <- aov(Sepal.Width ~ Species, data = iris)

irisEmm <- emmeans(irisAov, ~ Species)

irisEmm## Species emmean SE df lower.CL upper.CL

## setosa 3.428 0.0480391 147 3.333064 3.522936

## versicolor 2.770 0.0480391 147 2.675064 2.864936

## virginica 2.974 0.0480391 147 2.879064 3.068936

##

## Confidence level used: 0.95This is the most basic output but already it is very useful. We can do better though. If we want the pairwise contrasts for each treatment combination then we can go:

irisAov <- aov(Sepal.Width ~ Species, data = iris)

irisEmm <- emmeans(irisAov, ~ Species)

pairs(irisEmm)## contrast estimate SE df t.ratio p.value

## setosa - versicolor 0.658 0.06793755 147 9.685 <.0001

## setosa - virginica 0.454 0.06793755 147 6.683 <.0001

## versicolor - virginica -0.204 0.06793755 147 -3.003 0.0088

##

## P value adjustment: tukey method for comparing a family of 3 estimatesNote that pairs() automatically incorporates an adjustment to the p-value based on the number of treatments! But if this output is not easy for you to interpret we can also see it graphically:

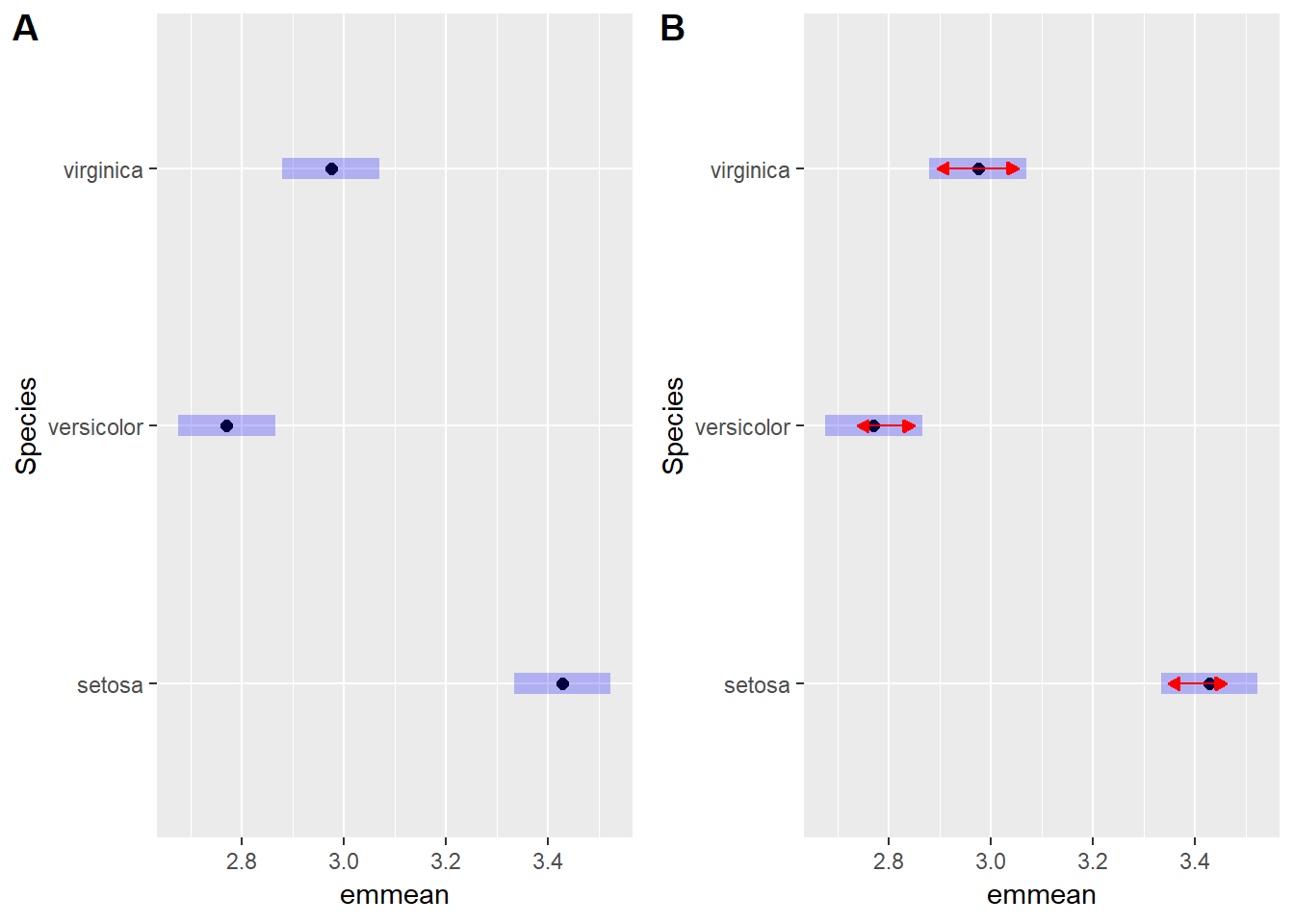

irisAov <- aov(Sepal.Width ~ Species, data = iris)

irisEmm <- emmeans(irisAov, ~ Species)

## this just plots the means and the confidence intervals

irisWithout <- plot(irisEmm)

## this incorporates a red arrow. If the arrows overlap then the means are not different. Verginica and Versicolor are somewhat similar (p = 0.0088) but their arrows do not overlap and so they are regarded as different.

irisWith <- plot(irisEmm, comparisons = TRUE)

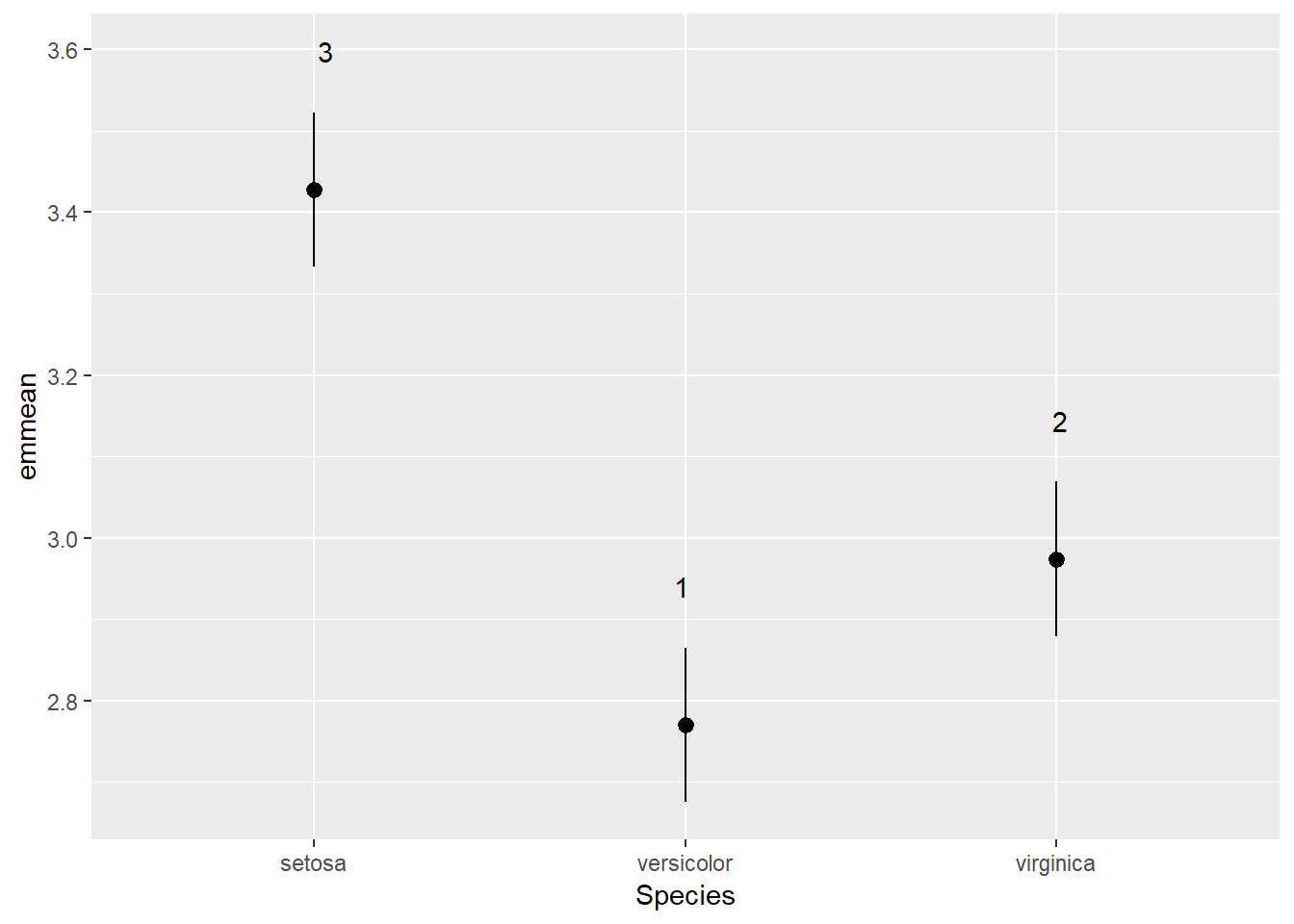

You can also get this output with number groupings. emmeans has a function called CLD() which assigns groups of treatments sharing similar means the same number:

irisAov <- aov(Sepal.Width ~ Species, data = iris)

irisEmm <- emmeans(irisAov, ~ Species)

irisCld <- CLD(irisEmm)

irisCld## Species emmean SE df lower.CL upper.CL .group

## versicolor 2.770 0.0480391 147 2.675064 2.864936 1

## virginica 2.974 0.0480391 147 2.879064 3.068936 2

## setosa 3.428 0.0480391 147 3.333064 3.522936 3

##

## Confidence level used: 0.95

## P value adjustment: tukey method for comparing a family of 3 estimates

## significance level used: alpha = 0.05Clearly all species have drastically different sepal widths. This is a fairly nice output but suppose we wanted to plot it with ggplot2? How would we do that. Well luckily cld() saves the above table as a neat data.frame which we can just dive into with ggplot2:

irisAov <- aov(Sepal.Width ~ Species, data = iris)

irisEmm <- emmeans(irisAov, ~ Species)

irisCld <- cld(irisEmm)

irisCld## Species emmean SE df lower.CL upper.CL .group

## versicolor 2.770 0.0480391 147 2.675064 2.864936 1

## virginica 2.974 0.0480391 147 2.879064 3.068936 2

## setosa 3.428 0.0480391 147 3.333064 3.522936 3

##

## Confidence level used: 0.95

## P value adjustment: tukey method for comparing a family of 3 estimates

## significance level used: alpha = 0.05ggplot(data = irisCld) +

geom_pointrange(aes(x = Species, y = emmean, ymin = lower.CL, ymax = upper.CL)) +

geom_text(aes(x = Species, y = upper.CL + 0.075, label = .group))

Pretty simple hey? No more playing around in PowerPoint to get that right. Everything can be done from right in R. And if you want to be a little more specific with how your labels are positioned these figures are entirely editible as you can export them as a .svg file. Open that up in a free vector editing program called Inkscape and you can move any point or label around. This will be very useful later when we produce some multivariate figures with lots of species and environmental variable labels. So that is the basics of anova in R. But anovas are not the most reliable statistical tool for testing whether the means of treatments are different. The reason for this is that anova contains many assuptions. This means that if we want to ensure our data meet this long list of assumptions then we need to drastically transform our data - something which is challenging to do in itself and then you are left with results based on transformed data which your reader will likely struggle to understand. That is where GLMs (generalised linear models) come into the picture.

Generalised linear models

glm()

The main constraint with our data is the distribution that the residuals follow and the scale of the response variable. glm() is is the function that we use to give more detailed descriptions of how we want our data to be analysed. It constains similar arguments to our previous functions (formula and data) but it adds another key argument (family). This argument allows you to tell glm() what kind of distribution it should use to model the residuals of the response variable. Type ?family to get a quick run down of the different families that are available. In some extreme cases your data might not align towards any of those families (such as when you have lots of zeros on a continuous positive scale). If that happens there are packages that you can load which allow you to modify your distributions to handle this difficult kind of data. GLMs allow you to accurately model:

- normally distributed data - very rare actually, gaussian distribution (if we run a GLM with a gaussian distribution we will basically get an anova).

- proportional data - anything that is bound between zero and one, germination proportions, seed set, propo time spent doing a particular activity, binomial distribution.

- count data - integers greater than one, number of animals spotted, number of plants in a community, poisson distribution.

- positive continuous data - the most common, length of leaves, biomass weights, gamma distribution.

Each distribution family has something called a link function associated with it. This is a transformation method that is used to take your untransformed data and fit it to the distribution that you have chosen. These are normally matched to your family but you can modify them should you wish to. Generally we can keep this at the standard setting. I will not teach you the fundamentals of GLMs here but there are some good resources available which you can draw from:

This Methods in Ecology and Evolution article.

This TREE article on GLMMs.

This YouTube channel and this YouTube channel if you prefer on videos.

Gamma() family

To carry out our analyses we are going to need to access some data contained within another package. Please download and install car. Load this package and the carData package. The datasets that we will use here are contained within the latter package. Type View(carData::Salaries) to pull up and expect the dataset. Type ?carData::Salaries to pull up the dataset’s help file.

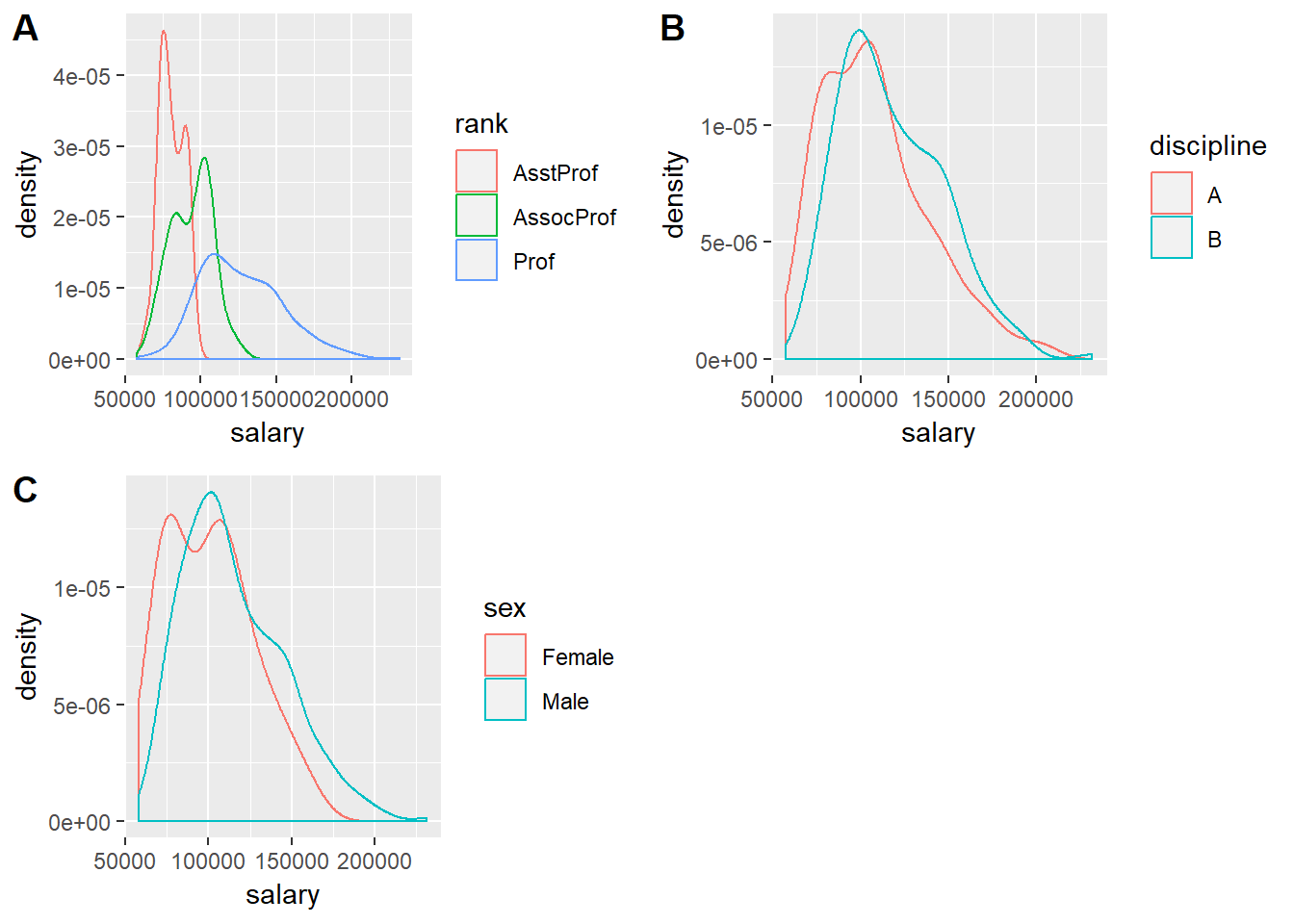

sal.rank <- ggplot(data = Salaries) +

geom_density(mapping = aes(x = salary, colour = rank))

sal.disc <- ggplot(data = Salaries) +

geom_density(mapping = aes(x = salary, colour = discipline))

sal.sex <- ggplot(data = Salaries) +

geom_density(mapping = aes(x = salary, colour = sex))

cowplot::plot_grid(sal.rank, sal.disc, sal.sex, labels = "AUTO")

Not quite a normal distribution. These data follow a gamma distribution. There are a few other variables in this dataset but for now we will just stick with categorical factors. Now that we have called up cars (the package name stands for “Companion to Applied Regression”) we can use its Anova() function instead of the basic anova():

salariesGLMgamma <- glm(salary ~ rank*discipline*sex, data = Salaries, family = Gamma())

Anova(salariesGLMgamma)## Analysis of Deviance Table (Type II tests)

##

## Response: salary

## LR Chisq Df Pr(>Chisq)

## rank 368.02 2 < 2.2e-16 ***

## discipline 40.56 1 1.906e-10 ***

## sex 1.93 1 0.16490

## rank:discipline 6.01 2 0.04949 *

## rank:sex 0.52 2 0.77255

## discipline:sex 1.77 1 0.18343

## rank:discipline:sex 0.64 2 0.72793

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We can also use anova() but then we would also have to tell the function what test to run. If we do not do this then it will not compute the test statistic and the associated p-value.

salariesGLMgamma <- glm(salary ~ rank*discipline*sex, data = Salaries, family = Gamma())

anova(salariesGLMgamma)## Analysis of Deviance Table

##

## Model: Gamma, link: inverse

##

## Response: salary

##

## Terms added sequentially (first to last)

##

##

## Df Deviance Resid. Df Resid. Dev

## NULL 396 27.044

## rank 2 12.4840 394 14.560

## discipline 1 1.3819 393 13.178

## sex 1 0.0741 392 13.104

## rank:discipline 2 0.2335 390 12.871

## rank:sex 2 0.0129 388 12.858

## discipline:sex 1 0.0603 387 12.798

## rank:discipline:sex 2 0.0216 385 12.776anova(salariesGLMgamma, test = "Chisq")## Analysis of Deviance Table

##

## Model: Gamma, link: inverse

##

## Response: salary

##

## Terms added sequentially (first to last)

##

##

## Df Deviance Resid. Df Resid. Dev Pr(>Chi)

## NULL 396 27.044

## rank 2 12.4840 394 14.560 < 2.2e-16 ***

## discipline 1 1.3819 393 13.178 1.901e-10 ***

## sex 1 0.0741 392 13.104 0.14022

## rank:discipline 2 0.2335 390 12.871 0.03246 *

## rank:sex 2 0.0129 388 12.858 0.82708

## discipline:sex 1 0.0603 387 12.798 0.18343

## rank:discipline:sex 2 0.0216 385 12.776 0.72793

## ---

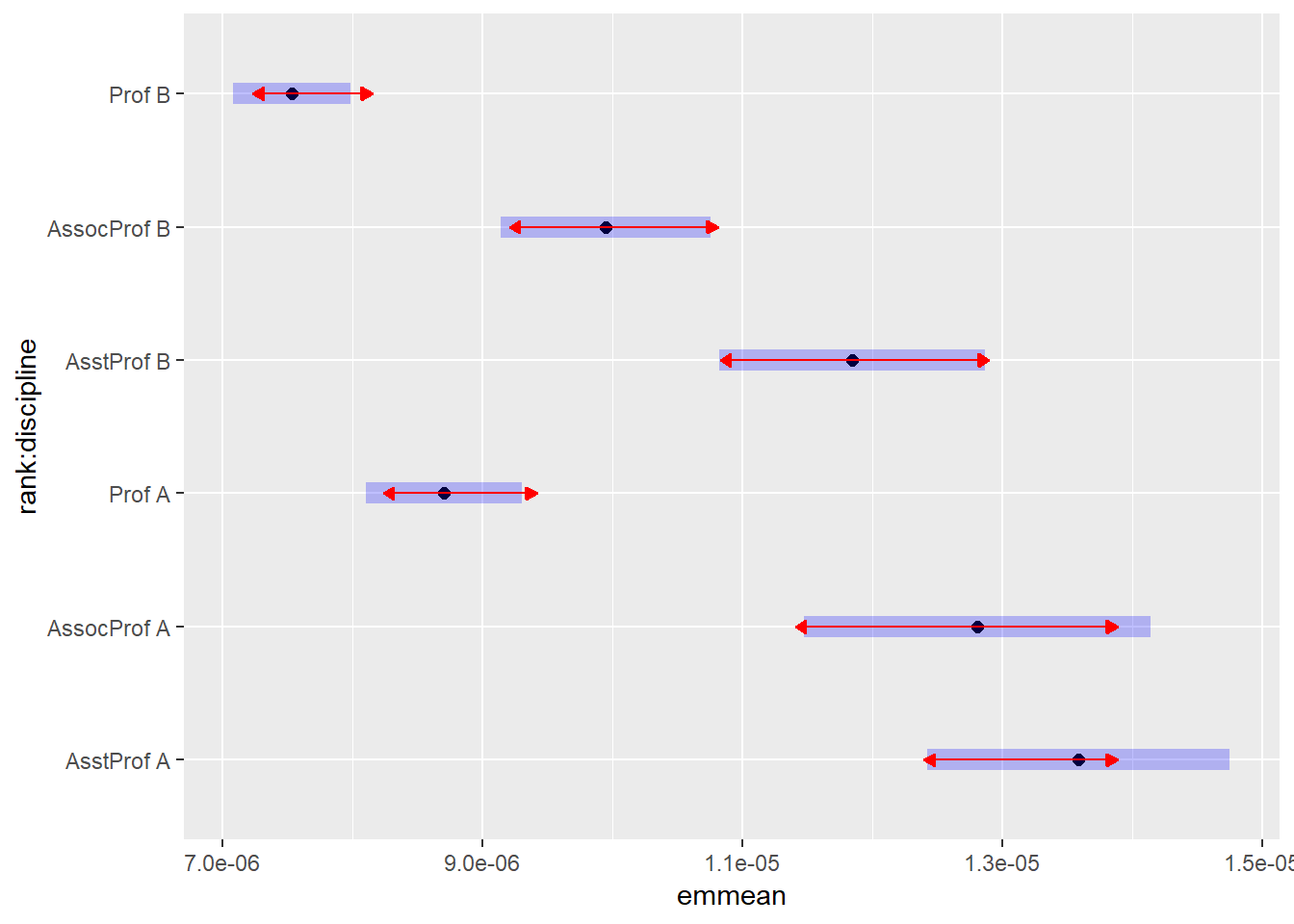

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Anova() is a more accurate method than anova(). Now that we have our anova table we can see that both rank and discipline but not sex affect the salaries of professors. But there is also an interaction effect between rank and discipline. The effect of rank is not consistant across disciplnes. Let us have a closer look at this using emmeans:

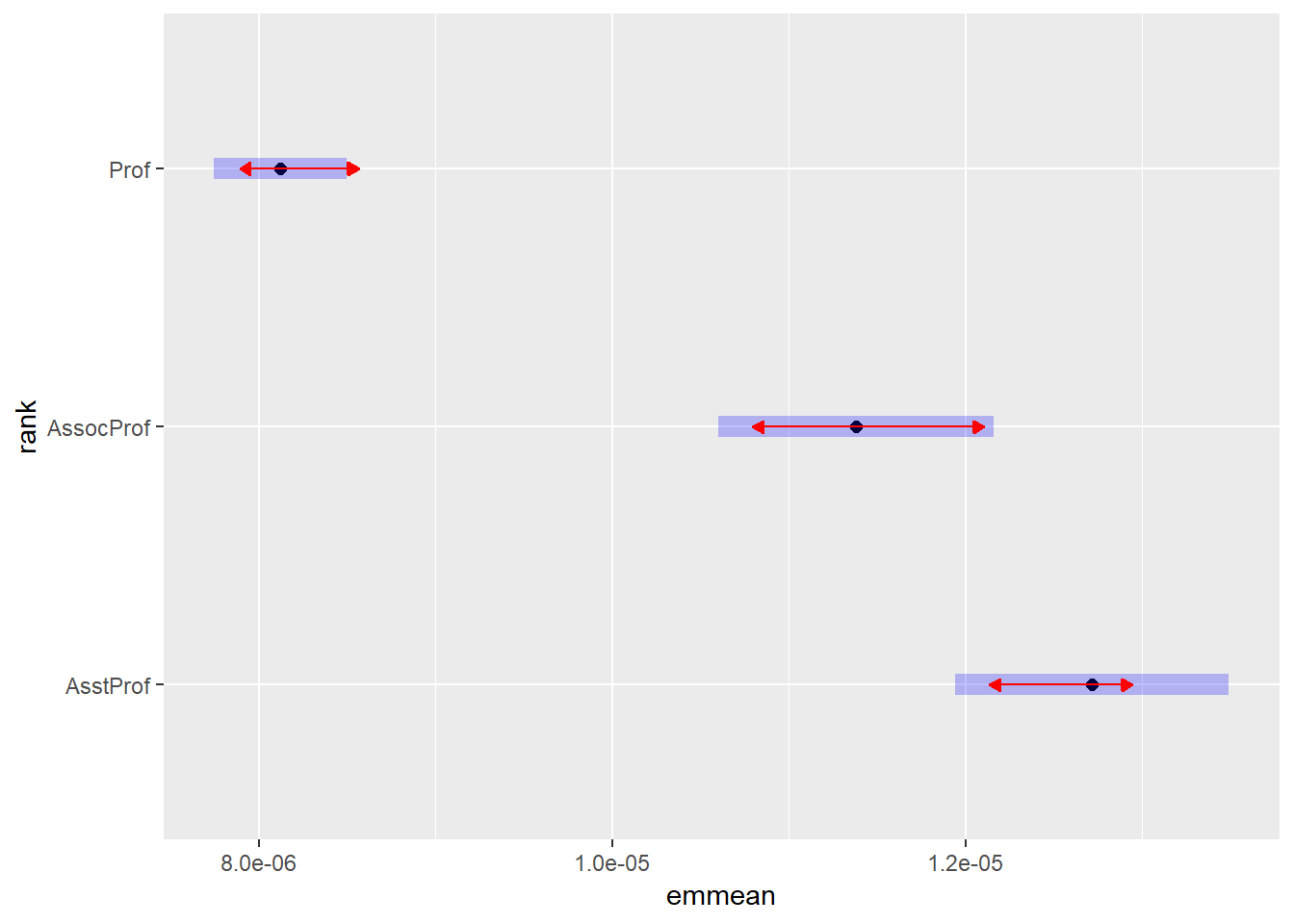

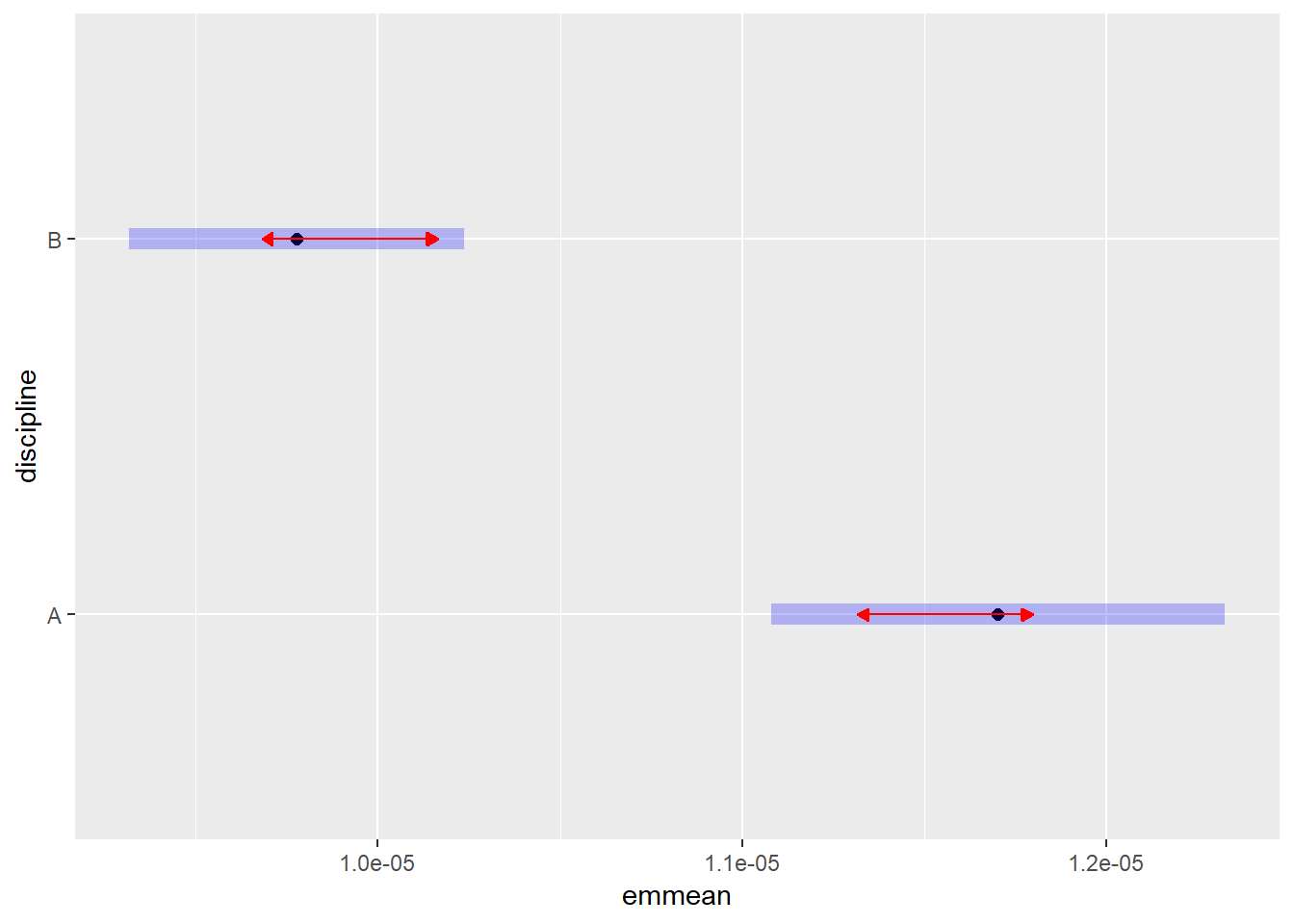

salariesGLMgamma <- glm(salary ~ rank*discipline*sex, data = Salaries, family = Gamma())

plot(emmeans(salariesGLMgamma, ~rank), comparisons = TRUE)## NOTE: Results may be misleading due to involvement in interactions

plot(emmeans(salariesGLMgamma, ~discipline), comparisons = TRUE)## NOTE: Results may be misleading due to involvement in interactions

plot(emmeans(salariesGLMgamma, ~rank*discipline), comparisons = TRUE)## NOTE: Results may be misleading due to involvement in interactions

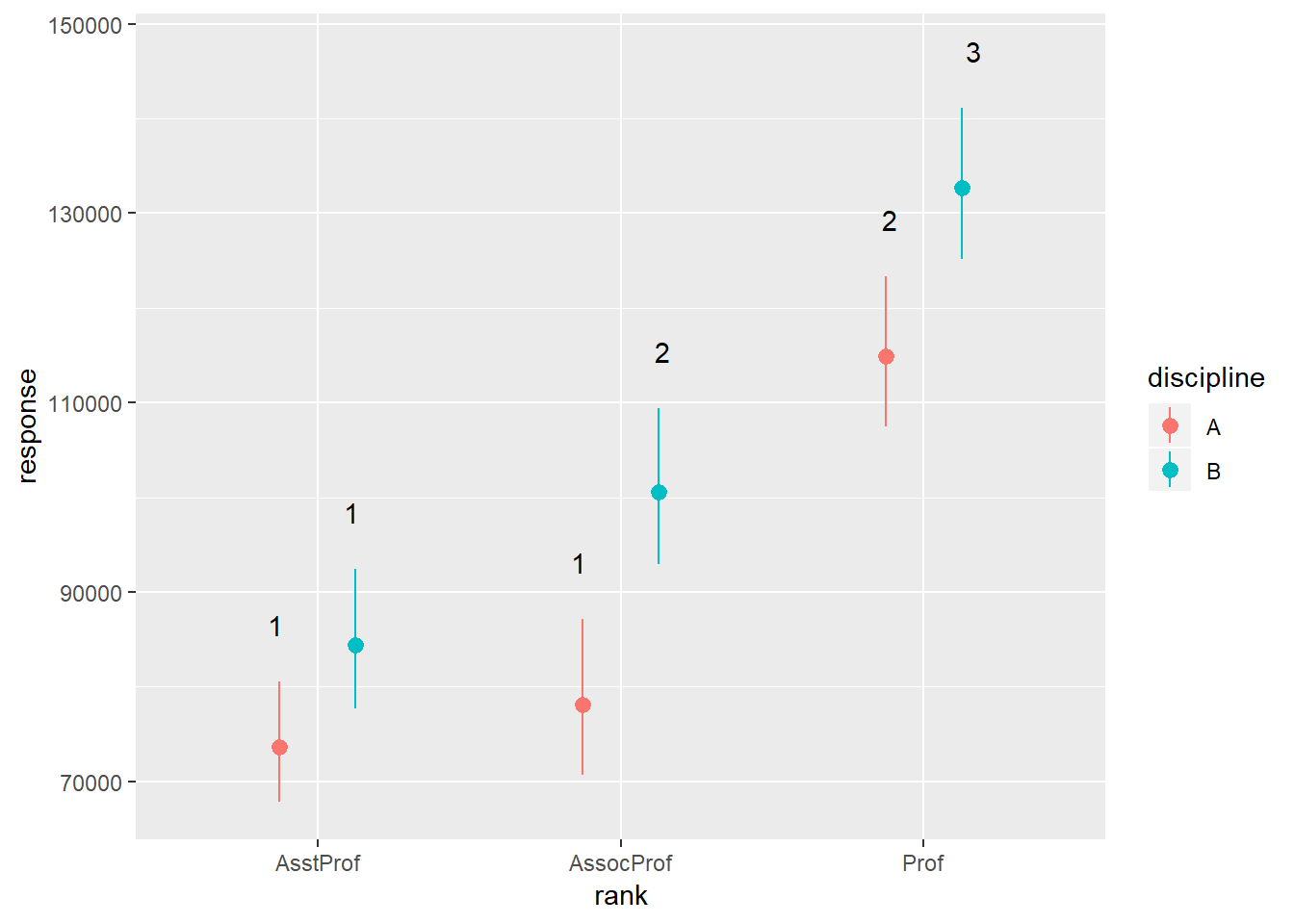

And now lets plot the interaction with groupings in ggplot:

rankDiscCld <- CLD(emmeans(salariesGLMgamma, ~rank*discipline, type = "response"))## NOTE: Results may be misleading due to involvement in interactionsggplot(rankDiscCld) +

geom_pointrange(mapping = aes(x = rank, y = response, ymin = asymp.LCL, ymax = asymp.UCL, colour = discipline), position = position_dodge(0.5)) +

geom_text(mapping = aes(x = rank, y = asymp.UCL + 6000, group = discipline, label = .group), position = position_dodge(0.5))

With geom_text() we need to tell ggplot how to group the different labels - we know that we must go with rank on the x-axis. Then we group by discipline and add a bit of space between the label and the upper confidence interval bar. But let’s see about making this figure “publication quality” using the theme() function:

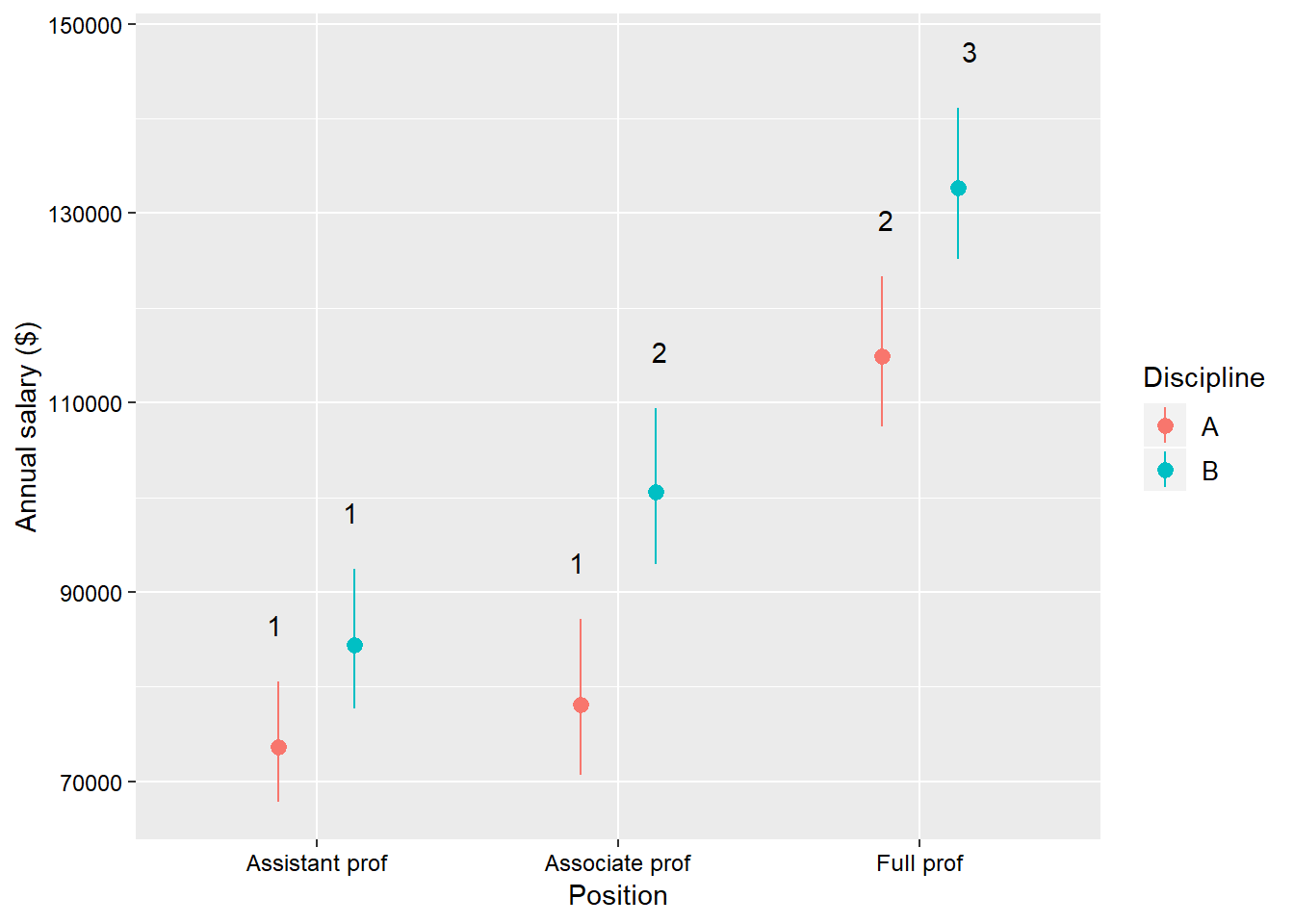

salaries_GLM_gamma <- glm(salary ~ rank*discipline*sex,

data = Salaries,

family = Gamma())

rank_Disc_Cld <- cld(emmeans(salariesGLMgamma,

~rank*discipline,

type = "response"))## NOTE: Results may be misleading due to involvement in interactionsggplot(rank_Disc_Cld) +

geom_pointrange(mapping = aes(x = rank,

y = response,

ymin = asymp.LCL,

ymax = asymp.UCL,

colour = discipline),

position = position_dodge(0.5)) +

geom_text(mapping = aes(x = rank,

y = asymp.UCL + 6000,

group = discipline,

label = .group),

position = position_dodge(0.5)) +

theme(axis.title = element_text(size = 11,

colour = "black"),

axis.text = element_text(size = 9,

colour = "black"),

legend.background = element_blank(),

legend.text = element_text(size = 10,

colour = "black"),

legend.title = element_text(size = 11,

colour = "black")) +

labs(x = "Position",

y = "Annual salary ($)",

colour = "Discipline") +

scale_x_discrete(labels = c("Assistant prof",

"Associate prof",

"Full prof"))

The one new function that we have used here is scale_x_discrete() which allows you to make fine adjustments to the x-axis - in this case the labels for the tick marks.

binomial() family

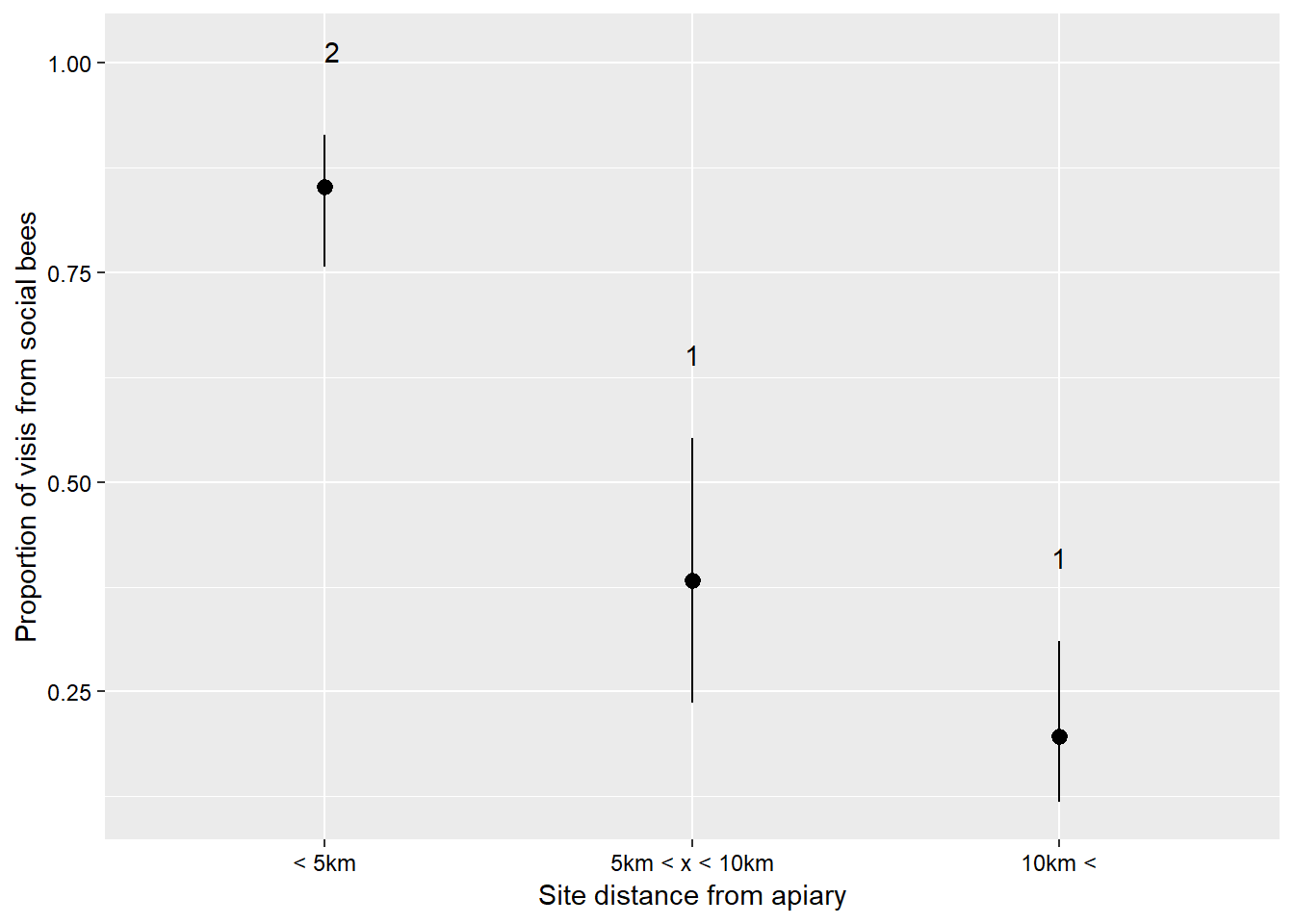

I will quickly go through some details regarding the binomial distribution because it is a powerful distribution that is probably under-utilised in the natural sciences. This distribution describes data which have a ‘success/failure’ kind of response. The key advantage of this distribution is that the y side of the formula can accept several ways of presenting data. The data can be in the form of proportions (values between zero and one), binary responses (ones or zeros), or successes and failures from which proportions can be calculated (suppose 40 seeds were planted, 28 germinate and 12 do not, we would input the successes as 28 and the failures as 12). The last method is the most powerful I think because it incorporates a weighting (40 seeds for that recording) as well as the proportion. Just putting 0.7 in (28/40) does not tell the analysis how many individuals made up that data point. If there are several different numbers of seeds that are planted then the analysis takes those different numbers into consideration. Let us see this in action. We are going to use a dataset which describes the number of visits to sunflowers over five minutes at sites which are at different distances away from an Apis melifera apiry. You can find the data here visitSoc describes the number of visits from social bees - A. melifera, and visitSol describes visits from solitary bees. site describes each site’s distance from the apiary, and area describes the size of the area that the site occupies.

sunflowerGlm <- glm(cbind(visitSoc, visitSol) ~ site, data = sunflower, family = binomial())

Anova(sunflowerGlm)## Analysis of Deviance Table (Type II tests)

##

## Response: cbind(visitSoc, visitSol)

## LR Chisq Df Pr(>Chisq)

## site 71.787 2 2.581e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1sunflowerSiteCld <- CLD(emmeans(sunflowerGlm, ~site, type = "response"))

ggplot(sunflowerSiteCld) +

geom_pointrange(mapping = aes(x = site,

y = prob,

ymin = asymp.LCL,

ymax = asymp.UCL),

position = position_dodge(0.5)) +

geom_text(mapping = aes(x = site,

y = asymp.UCL + 0.1,

label = .group),

position = position_dodge(0.5)) +

theme(axis.title = element_text(size = 11,

colour = "black"),

axis.text = element_text(size = 9,

colour = "black"),

legend.background = element_blank(),

legend.text = element_text(size = 10,

colour = "black"),

legend.title = element_text(size = 11,

colour = "black")) +

labs(x = "Site distance from apiary",

y = "Proportion of visis from social bees") +

scale_x_discrete(labels = c("< 5km",

"5km < x < 10km",

"10km <"))